Using sound to model the world | MIT News

Imagine the booming chords from a pipe organ echoing through the cavernous sanctuary of a massive stone cathedral.

The sound a cathedral-goer will hear is affected by many factors. These include the location of the organ, where the listener is standing, whether any columns, pews, or other obstacles stand between them, what the walls are made of, and the locations of windows or doorways. Hearing a sound can help someone envision their environment.

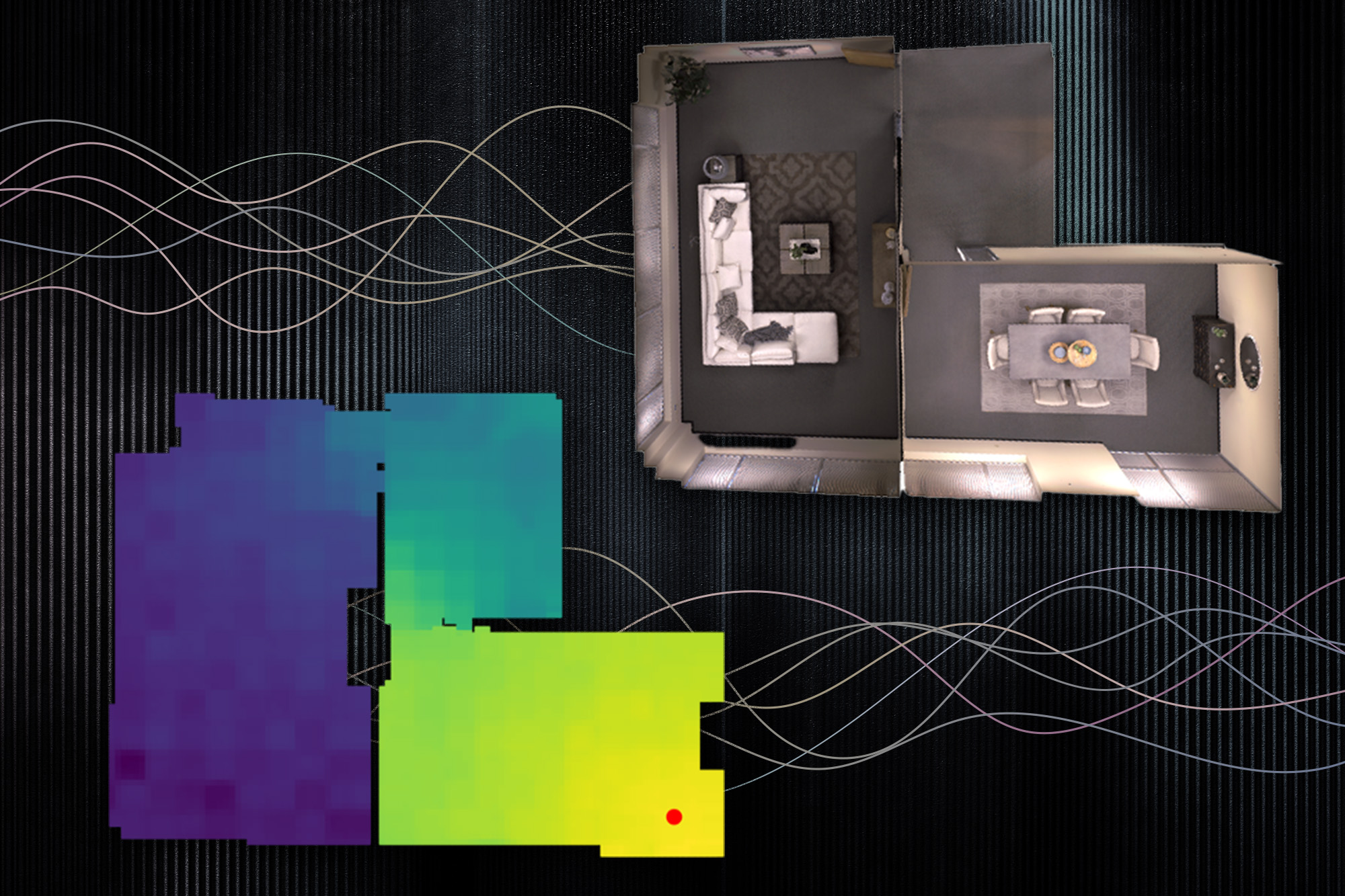

Researchers at MIT and the MIT-IBM Watson AI Lab are exploring the use of spatial acoustic information to help machines better envision their environments, too. They developed a machine-learning model that can capture how any sound in a room will propagate through the space, enabling the model to simulate what a listener would hear at different locations.

By accurately modeling the acoustics of a scene, the system can learn the underlying 3D geometry of a room from sound recordings. The researchers can use the acoustic information their system captures to build accurate visual renderings of a room, much as humans use sound to estimate the properties of their physical environment.

In addition to its potential applications in virtual and augmented reality, this technique could help artificial-intelligence agents develop a better understanding of the world around them. For instance, by modeling the acoustic properties of the sound in its environment, an underwater exploration robot could sense things that are farther away than it could with vision alone, says Yilun Du, a grad student in the Department of Electrical Engineering and Computer Science (EECS) and co-author of a paper describing the model.

“Most researchers have only focused on modeling vision so far. But as humans, we have multimodal perception. Not only is vision important, sound is also important. I think this work opens up an exciting research direction on better utilizing sound to model the world,” Du says.

Joining Du on the paper are lead author Andrew Luo, a grad student at Carnegie Mellon University (CMU); Michael J. Tarr, the Kavčić-Moura Professor of Cognitive and Brain Science at CMU; and senior authors Joshua B. Tenenbaum, professor in MIT’s Department of Brain and Cognitive Sciences and a member of the Computer Science and Artificial Intelligence Laboratory (CSAIL); Antonio Torralba, the Delta Electronics Professor of Electrical Engineering and Computer Science and a member of CSAIL; and Chuang Gan, a principal research staff member at the MIT-IBM Watson AI Lab. The research will be presented at the Conference on Neural Information Processing Systems.

Sound and vision

In computer vision research, a type of machine-learning model called an implicit neural representation model has been used to generate smooth, continuous reconstructions of 3D scenes from images. These models use neural networks, which contain layers of interconnected nodes, or neurons, that process data to complete a task.

The MIT researchers used the same type of model to capture how sound travels continuously through a scene.

But they found that vision models benefit from a property known as photometric consistency, which does not apply to sound. If one looks at the same object from two different locations, the object looks roughly the same. With sound, however, changing location can make what one hears completely different because of obstacles, distance, and other factors. This makes predicting audio very difficult.

The researchers overcame this problem by incorporating two properties of acoustics into their model: the reciprocal nature of sound and the influence of local geometric features.

Sound is reciprocal, which means that if the source of a sound and a listener swap positions, what the person hears is unchanged. Additionally, what one hears in a particular area is heavily influenced by local features, such as an obstacle between the listener and the source of the sound.
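Reciprocity can be built into a model rather than learned from data. Here is a minimal sketch, assuming 2D positions. If the network only ever sees features that are symmetric in the emitter and listener positions, swapping the two cannot change its output. The encoding below (coordinate sums, absolute differences, and distance) and the names `symmetric_pair_features` and `toy_response` are hypothetical illustrations, not the NAF's actual design.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def symmetric_pair_features(a: Point, b: Point) -> Tuple[float, ...]:
    """Encode an (emitter, listener) pair so that swapping them changes nothing.

    Hypothetical encoding: elementwise sums and absolute differences of the
    coordinates, plus the Euclidean distance. Every term is symmetric in a, b.
    """
    sums = tuple(x + y for x, y in zip(a, b))
    abs_diffs = tuple(abs(x - y) for x, y in zip(a, b))
    return sums + abs_diffs + (math.dist(a, b),)


def toy_response(emitter: Point, listener: Point) -> float:
    """Stand-in for a learned model.

    Any function of the symmetric features automatically satisfies
    reciprocity. This toy version just lets loudness fall off with distance.
    """
    features = symmetric_pair_features(emitter, listener)
    distance = features[-1]
    return 1.0 / (1.0 + distance)


source, listener = (1.0, 2.0), (4.0, 6.0)
print(toy_response(source, listener))  # distance is 5, so 1 / (1 + 5)
print(toy_response(listener, source))  # identical by construction
```

Because symmetry lives in the input encoding, no training example is needed to teach the model that swapped positions sound the same.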

To incorporate these two factors into their model, called a neural acoustic field (NAF), the researchers augment the neural network with a grid that captures objects and architectural features in the scene, like doorways or walls. The model randomly samples points on that grid to learn the features at specific locations.
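A grid of local features can be sketched as follows. The sketch makes several assumptions for illustration:

- a 2D grid of feature vectors is laid over the floor plan;
- bilinear interpolation reads features at any continuous position;
- the grid size, the single feature channel, the hypothetical "doorway" cell, and the function name `bilinear_features` are all invented here.

In a trained model the grid values would be learned rather than set by hand.

```python
import random
from typing import List

Grid = List[List[List[float]]]  # grid[row][col] -> feature vector


def bilinear_features(grid: Grid, x: float, y: float) -> List[float]:
    """Blend the four grid cells surrounding the continuous position (x, y).

    x indexes columns and y indexes rows, in grid units. Positions are clamped
    to the grid so every query lands inside it.
    """
    rows, cols = len(grid), len(grid[0])
    x = min(max(x, 0.0), cols - 1.0)
    y = min(max(y, 0.0), rows - 1.0)
    c0, r0 = int(x), int(y)
    c1, r1 = min(c0 + 1, cols - 1), min(r0 + 1, rows - 1)
    tx, ty = x - c0, y - r0
    out = []
    for k in range(len(grid[0][0])):
        top = (1 - tx) * grid[r0][c0][k] + tx * grid[r0][c1][k]
        bottom = (1 - tx) * grid[r1][c0][k] + tx * grid[r1][c1][k]
        out.append((1 - ty) * top + ty * bottom)
    return out


# Toy 3x3 floor plan with one feature channel. The cell at row 1, col 2 marks a
# hypothetical doorway; in a real model these values would be learned.
grid = [
    [[0.0], [0.0], [0.0]],
    [[0.0], [0.0], [1.0]],
    [[0.0], [0.0], [0.0]],
]

print(bilinear_features(grid, 1.5, 1.0))  # halfway between an empty cell and the doorway

# Randomly sampled query points, as a training loop might draw them.
rng = random.Random(0)
samples = [(rng.uniform(0, 2), rng.uniform(0, 2)) for _ in range(4)]
feats = [bilinear_features(grid, x, y) for x, y in samples]
```

Because each query only touches its four neighboring cells, a position next to the doorway is dominated by the doorway's features. This matches the intuition that nearby geometry matters most.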

“If you imagine standing near a doorway, what most strongly affects what you hear is the presence of that doorway, not necessarily geometric features far away from you on the other side of the room. We found this information enables better generalization than a simple fully connected network,” Luo says.

From predicting sounds to visualizing scenes

Researchers can feed the NAF visual information about a scene and a few spectrograms that show what a piece of audio would sound like when the emitter and listener are located at target locations around the room. The model then predicts what that audio would sound like if the listener moved to any point in the scene.
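A spectrogram shows how a sound's energy is distributed across frequencies as time passes. The minimal sketch below computes one with a short-time Fourier transform. It assumes a Hann window, an 8-sample frame, and a hop of 4 samples, all arbitrary choices for illustration. Practical pipelines use much longer frames, an FFT library, and often log magnitudes.

```python
import cmath
import math
from typing import List


def spectrogram(signal: List[float], frame: int = 8, hop: int = 4) -> List[List[float]]:
    """Magnitude spectrogram via a short-time Fourier transform (STFT).

    Each row is one Hann-windowed frame. Each column is a frequency bin from 0
    up to the Nyquist frequency (frame // 2 + 1 bins).
    """
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame) for n in range(frame)]
    rows = []
    for start in range(0, len(signal) - frame + 1, hop):
        chunk = [signal[start + n] * window[n] for n in range(frame)]
        row = []
        for k in range(frame // 2 + 1):
            # Direct DFT of the windowed frame at bin k.
            coeff = sum(
                chunk[n] * cmath.exp(-2j * math.pi * k * n / frame)
                for n in range(frame)
            )
            row.append(abs(coeff))
        rows.append(row)
    return rows


# A pure tone completing 2 cycles per 8-sample frame should peak in bin 2.
tone = [math.sin(2 * math.pi * 2 * n / 8) for n in range(32)]
spec = spectrogram(tone)
peaks = [max(range(len(row)), key=row.__getitem__) for row in spec]
print(len(spec), "frames; peak bin per frame:", peaks)
```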

The NAF outputs an impulse response, which captures how a sound should change as it propagates through the scene. The researchers then apply this impulse response to different sounds to hear how those sounds should change as a person walks through a room.
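Applying an impulse response to a sound amounts to convolving the two. Convolution is the standard way a linear time-invariant system transforms its input. Below is a minimal sketch in plain Python. The two impulse responses are made-up toy values standing in for what a model might predict at a listener position near the speaker and at one around a corner.

```python
from typing import List


def convolve(signal: List[float], impulse_response: List[float]) -> List[float]:
    """Full discrete convolution: y[n] = sum over k of h[k] * x[n - k].

    For a linear time-invariant acoustic model, this is how a dry source
    signal becomes what the listener hears.
    """
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, x in enumerate(signal):
        for k, h in enumerate(impulse_response):
            out[i + k] += x * h
    return out


# Hypothetical impulse responses for two listener positions (toy values).
near_speaker = [1.0, 0.0, 0.3]        # strong direct path plus one weak echo
around_corner = [0.0, 0.0, 0.2, 0.1]  # delayed, quieter arrival

dry = [1.0, 0.5, 0.0, -0.5]  # a short "dry" sound clip
print(convolve(dry, near_speaker))
print(convolve(dry, around_corner))
```

Real impulse responses span thousands of samples, so production code would use FFT-based convolution. The direct double loop here just makes the formula visible.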

For instance, if a song is playing from a speaker in the center of a room, their model would show how that sound gets louder as a person approaches the speaker and then becomes muffled as they walk out into an adjacent hallway.

When the researchers compared their technique to other methods that model acoustic information, it generated more accurate sound models in every case. And because it learned local geometric information, their model generalized to new locations in a scene much better than other methods did.

Moreover, they found that applying the acoustic information their model learns to a computer vision model can lead to a better visual reconstruction of the scene.

“When you only have a sparse set of views, using these acoustic features enables you to capture boundaries more sharply, for instance. And maybe this is because to accurately render the acoustics of a scene, you have to capture the underlying 3D geometry of that scene,” Du says.

The researchers plan to continue enhancing the model so it can generalize to brand-new scenes. They also want to apply this technique to more complex impulse responses and larger scenes, such as entire buildings or even a town or city.

“This new technique might open up new opportunities to create a multimodal immersive experience in the metaverse application,” adds Gan.

“My group has done a lot of work on using machine-learning methods to accelerate acoustic simulation or model the acoustics of real-world scenes. This paper by Chuang Gan and his co-authors is clearly a major step forward in this direction,” says Dinesh Manocha, the Paul Chrisman Iribe Professor of Computer Science and Electrical and Computer Engineering at the University of Maryland, who was not involved with this work. “In particular, this paper introduces a nice implicit representation that can capture how sound can propagate in real-world scenes by modeling it using a linear time-invariant system. This work can have many applications in AR/VR as well as real-world scene understanding.”

This work is supported, in part, by the MIT-IBM Watson AI Lab and the Tianqiao and Chrissy Chen Institute.